hlockwood

Well-known

Harry,

Having confidence in QM is no different than having confidence in gravity. Being competent at QM is profoundly boring. If you have the right model and can perform the calculations, you can predict every experimental result involving coherent phenomena to within the accuracy of the apparatus.

The problem is: Unlike gravity, QM is fundamentally non-intuitive. QM defies common sense. Human brains are not compatible with how QM works. This is what Feynman meant (besides the classic "how" vs. "why" cliche).

A not-so-minor point: I said "confidence in his knowledge", not "confidence in QM". I have the latter but not the former.

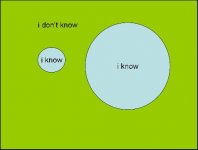

Just for the hell of it, I'll throw in my model of QT knowledge.

First level for the newly exposed: FUD, fear, uncertainty, doubt.

Second level: "I don't really understand it, but it works beautifully. So, I'm satisfied."

Third level: Arrogance. "I understand it."

Fourth level: The highest level. "I don't understand it, and no one else does either." This is where you'll find people like Feynman.

Beating of dead horse finished.

Harry